Archive

Converting a taxonomy field into cascading drop-down fields using PnP Branding.JSLink

Managed metadata fields don’t provide the same look and feel as the choice field type. They are quite clunky, especially when a lot of terms are displayed.

The requirement came up to display managed metadata fields as drop downs. After googling for a while, I stumbled on the PnP sample Branding.JSLink solution, which has an example of how to achieve this. It is a no-code sandboxed solution with several examples of how to enrich fields. I only wanted the feature that turns a taxonomy field into cascading drop downs.

I copied only the files TaxonomyOverrides.js and ManagedMetadata.js into the “Style Library” of my test SharePoint site collection. I then modified the Fields section of TaxonomyOverrides.js to reference the field “DocumentType” that I wanted transformed.

Type.registerNamespace('jslinkOverride');

var jslinkOverride = window.jslinkOverride || {};

jslinkOverride.Taxonomy = {};
jslinkOverride.Taxonomy.Templates = {
    Fields: {
        // internal name of the managed metadata field to render as cascading drop downs
        'DocumentType': {
            'NewForm': jslinkTemplates.Taxonomy.editMode,
            'EditForm': jslinkTemplates.Taxonomy.editMode
        }
    }
};

jslinkOverride.Taxonomy.Functions = {};

jslinkOverride.Taxonomy.Functions.RegisterTemplate = function () {
    // register our object, which contains our templates
    SPClientTemplates.TemplateManager.RegisterTemplateOverrides(jslinkOverride.Taxonomy);
};

jslinkOverride.Taxonomy.Functions.MdsRegisterTemplate = function () {
    // register our custom template
    jslinkOverride.Taxonomy.Functions.RegisterTemplate();
    // and make sure our custom view fires each time MDS performs a page transition.
    // siteServerRelativeUrl has no trailing slash unless it is the root ("/"), so normalise it first
    var siteUrl = _spPageContextInfo.siteServerRelativeUrl.replace(/\/$/, '');
    var thisUrl = siteUrl + '/Style Library/OfficeDevPnP/Branding.JSLink/TemplateOverrides/TaxonomyOverrides.js';
    RegisterModuleInit(thisUrl, jslinkOverride.Taxonomy.Functions.RegisterTemplate);
};

if (typeof _spPageContextInfo != 'undefined' && _spPageContextInfo != null) {
    // it's an MDS page refresh
    jslinkOverride.Taxonomy.Functions.MdsRegisterTemplate();
} else {
    // normal page load
    jslinkOverride.Taxonomy.Functions.RegisterTemplate();
}

DocumentType is a taxonomy field which, out of the box (OOB), has the standard SharePoint look and feel.

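I then referenced ManagedMetadata.js and TaxonomyOverrides.js on the EditForm page of the document library through a Script Editor web part, using the tags below. ManagedMetadata.js is loaded first, since TaxonomyOverrides.js relies on jslinkTemplates.Taxonomy.editMode from it.

<script type="text/javascript" src="/Projects/EDRMS/Style%20Library/OfficeDevPnP/Branding.JSLink/Generics/ManagedMetadata.js"></script>

<script type="text/javascript" src="/Projects/EDRMS/Style%20Library/OfficeDevPnP/Branding.JSLink/TemplateOverrides/TaxonomyOverrides.js"></script>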

Voila, the taxonomy field is transformed into cascading drop downs.
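To illustrate the idea behind cascading drop downs, here is a minimal JavaScript sketch. It is not the code in the PnP ManagedMetadata.js file. It assumes the terms are available as paths whose levels are separated by ";" (the format of a term's PathOfTerm) and shows how the options of each drop down can be derived from the choices made in the previous ones. The term labels are hypothetical.

```javascript
// Hypothetical illustration, not the PnP ManagedMetadata.js implementation.
// Assumes each term is given as a path whose levels are separated by ";".

// Build a nested lookup, e.g. { Finance: { Invoices: {}, Receipts: {} }, HR: { Contracts: {} } }
function buildTermTree(termPaths) {
  const tree = Object.create(null); // no prototype, so any term label is a safe key
  for (const path of termPaths) {
    let node = tree;
    for (const label of path.split(";")) {
      if (!node[label]) node[label] = Object.create(null);
      node = node[label];
    }
  }
  return tree;
}

// Options for the next drop down, given the labels chosen in the previous drop downs.
function optionsFor(tree, selectedLabels) {
  let node = tree;
  for (const label of selectedLabels) {
    node = node[label];
    if (!node) return []; // the selection does not exist in the term set
  }
  return Object.keys(node).sort();
}

// Usage with hypothetical terms
const tree = buildTermTree(["Finance;Invoices", "Finance;Receipts", "HR;Contracts"]);
console.log(optionsFor(tree, []));          // first drop down: Finance, HR
console.log(optionsFor(tree, ["Finance"])); // second drop down after picking Finance: Invoices, Receipts
```

An empty result means the selected term has no children, so no further drop down is needed.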

from reshmeeauckloo http://bit.ly/2qwao8c

|

Migrate data from one SQL table to another database table

Sometimes you may have to migrate data from one SQL database to another SQL database in another environment, e.g. from Live to the Test and Dev environments, and vice versa.

You may argue that the easiest solution is to restore a backup of the database. Unfortunately, this does not work well in environments where data in Live is classified as sensitive and is not allowed to be copied to a Dev or Test machine, where security is not as strict as on a Live machine. However, some Live data is not classified as sensitive or restricted, for example lists of values, and may have to be migrated to Test and Dev for testing or development purposes.

A copy of the data can be exported from the source database as a CSV or DAT file using a “select * from table_value” statement.

The data can then be bulk imported into a temp table in the destination database, and a MERGE statement used to insert missing records and update existing ones.

A sample script can be downloaded from TechNet.
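The MERGE statement itself is T-SQL. As a language-neutral illustration of the rules it applies here (insert missing rows, update rows that changed, leave other target rows alone), the sketch below applies the same logic to in-memory rows in JavaScript. The key column name `id` and the sample rows are assumptions, not part of the TechNet script.

```javascript
// Illustration of the MERGE rules in plain JavaScript (not T-SQL).
// Assumptions: rows are plain objects and "id" is the key column.
function mergeRows(targetRows, sourceRows, key = "id") {
  // Index the target table by key; a Map keeps the original row order.
  const byKey = new Map(targetRows.map((row) => [row[key], { ...row }]));
  let inserted = 0;
  let updated = 0;
  for (const src of sourceRows) {
    const existing = byKey.get(src[key]);
    if (!existing) {
      // WHEN NOT MATCHED BY TARGET: insert the missing record
      byKey.set(src[key], { ...src });
      inserted++;
    } else if (Object.keys(src).some((col) => existing[col] !== src[col])) {
      // WHEN MATCHED and a column differs: update the record
      Object.assign(existing, src);
      updated++;
    }
    // Matched and identical: nothing to do. Target-only rows are kept (no delete).
  }
  return { rows: [...byKey.values()], inserted, updated };
}

// Usage with hypothetical list-of-values rows
const target = [{ id: 1, value: "Open" }, { id: 2, value: "Closd" }];
const source = [{ id: 2, value: "Closed" }, { id: 3, value: "Pending" }];
const result = mergeRows(target, source);
console.log(result.inserted, result.updated); // 1 1
```

Running the same merge a second time inserts and updates nothing, which is what makes this approach safe to repeat between environments.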

from reshmeeauckloo http://bit.ly/2qsdHJC

|

Delete all list Item and file versions from site and sub sites using CSOM and PowerShell

The Versions property is not available on the ListItem class in the client object model, as it is in the server object model. I came across the article SharePoint – Get List Item Versions Using Client Object Model, which describes how to get a list item's versions using the method GetFileByServerRelativeUrl, passing in the FileUrl. The trick is to build the list item URL as “sites/test/Lists/MyList/30_.000”, where 30 is the ID of the item whose version history needs to be retrieved. Using that information, I created a PowerShell script that loops through all lists in the site collection and its subsites and deletes the version history of their items.
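The URL trick can be captured in a small helper. This is a hypothetical JavaScript sketch, not part of the PowerShell script: it only builds the string that would be passed to GetFileByServerRelativeUrl, assuming the list's server-relative root folder URL (for example /sites/test/Lists/MyList) is already known.

```javascript
// Hypothetical helper: a list item's version history is reachable as a "file"
// named "<itemId>_.000" under the list's server-relative root folder URL.
function itemVersionFileUrl(listRootFolderUrl, itemId) {
  if (!Number.isInteger(itemId) || itemId < 1) {
    throw new Error("itemId must be a positive integer");
  }
  // Remove any trailing slash so the result never contains "//".
  const root = listRootFolderUrl.replace(/\/+$/, "");
  return `${root}/${itemId}_.000`;
}

console.log(itemVersionFileUrl("/sites/test/Lists/MyList", 30));
// /sites/test/Lists/MyList/30_.000
```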

The script targets a SharePoint Online tenant environment.

Please note that I have used the script Load-CSOMProperties.ps1 from the blog post Loading Specific Values Using Lambda Expressions and the SharePoint CSOM API with Windows PowerShell to query object properties in a way similar to Lambda expressions in C#. The lines below show how Load-CSOMProperties.ps1, which I copied into the same directory as DeleteAllVersionsFromListItems.ps1, is used.

- Load the Load-CSOMProperties.ps1 file

Set-Location $PSScriptRoot

$pLoadCSOMProperties = (Get-Location).ToString() + "\Load-CSOMProperties.ps1"

. $pLoadCSOMProperties

- Retrieve ServerRelativeURL property from file object

Load-CSOMProperties -object $file -propertyNames @("ServerRelativeUrl");

You can download the script from technet.

from reshmeeauckloo http://bit.ly/2ozAHFb

|

Rebuild and Reorganize indexes on all tables in MS database

As part of database maintenance, indexes on databases have to be rebuilt or reorganised depending on how fragmented the indexes are. From the article Reorganize and Rebuild Indexes, the advice is to reorganise index if avg_fragmentation_in_percent value is between 5 and 30 and to rebuild index if it is more than 30%.

The script below queries all indexes that are more than 5 percent fragmented and uses a cursor to loop through the results, reorganising or rebuilding each index with dynamic SQL depending on its fragmentation: if avg_fragmentation_in_percent is between 5 and 30 the index is reorganised, otherwise it is rebuilt.

The script can be downloaded from the TechNet Gallery.

declare @schemaName nvarchar(128)
declare @tableName nvarchar(128)
declare @indexName nvarchar(128)
declare @indexType nvarchar(60)
declare @percentFragment decimal(11,2)
declare @sql nvarchar(max)

declare FragmentedTableList cursor for
SELECT OBJECT_SCHEMA_NAME(ind.object_id) AS SchemaName,
    OBJECT_NAME(ind.object_id) AS TableName,
    ind.name AS IndexName, indexstats.index_type_desc AS IndexType,
    indexstats.avg_fragmentation_in_percent
FROM sys.dm_db_index_physical_stats(DB_ID(), NULL, NULL, NULL, NULL) indexstats
INNER JOIN sys.indexes ind ON ind.object_id = indexstats.object_id
    AND ind.index_id = indexstats.index_id
WHERE
    -- minimum fragmentation to act on, e.g. > 30; you can specify any number in percent
    indexstats.avg_fragmentation_in_percent > 5
    AND ind.name is not null -- skip heaps
ORDER BY indexstats.avg_fragmentation_in_percent DESC

OPEN FragmentedTableList
FETCH NEXT FROM FragmentedTableList
INTO @schemaName, @tableName, @indexName, @indexType, @percentFragment

WHILE @@FETCH_STATUS = 0
BEGIN
    print 'Processing ' + @indexName + ' on table ' + @schemaName + '.' + @tableName + ' which is ' + cast(@percentFragment as nvarchar(50)) + '% fragmented'
    if (@percentFragment <= 30)
    BEGIN
        -- between 5% and 30%: reorganise (QUOTENAME protects names with spaces or special characters)
        set @sql = 'ALTER INDEX ' + QUOTENAME(@indexName) + ' ON ' + QUOTENAME(@schemaName) + '.' + QUOTENAME(@tableName) + ' REORGANIZE;'
        EXEC (@sql)
        print 'Finished reorganizing ' + @indexName + ' on table ' + @schemaName + '.' + @tableName
    END
    ELSE
    BEGIN
        -- above 30%: rebuild
        set @sql = 'ALTER INDEX ' + QUOTENAME(@indexName) + ' ON ' + QUOTENAME(@schemaName) + '.' + QUOTENAME(@tableName) + ' REBUILD;'
        EXEC (@sql)
        print 'Finished rebuilding ' + @indexName + ' on table ' + @schemaName + '.' + @tableName
    END
    FETCH NEXT FROM FragmentedTableList
    INTO @schemaName, @tableName, @indexName, @indexType, @percentFragment
END

CLOSE FragmentedTableList
DEALLOCATE FragmentedTableList
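The decision the cursor loop makes for each index can be expressed as a small, testable function. This JavaScript sketch is an illustration only, not the TechNet script. It follows the thresholds from the Reorganize and Rebuild Indexes guidance quoted above (reorganise between 5% and 30%, rebuild above 30%), and `quoteName` imitates T-SQL QUOTENAME by wrapping a name in brackets and doubling any closing bracket. The schema, table and index names in the usage are hypothetical.

```javascript
// Decision logic only: reorganise between 5% and 30%, rebuild above 30%.
function indexAction(avgFragmentationPercent) {
  if (avgFragmentationPercent > 30) return "REBUILD";
  if (avgFragmentationPercent > 5) return "REORGANIZE";
  return null; // below the threshold: leave the index alone
}

// Imitates T-SQL QUOTENAME: wrap in brackets and double any closing bracket.
function quoteName(name) {
  return "[" + name.replace(/\]/g, "]]") + "]";
}

// Builds the dynamic ALTER INDEX statement, or null when no action is needed.
function alterIndexStatement(schema, table, index, avgFragmentationPercent) {
  const action = indexAction(avgFragmentationPercent);
  if (!action) return null;
  return `ALTER INDEX ${quoteName(index)} ON ${quoteName(schema)}.${quoteName(table)} ${action};`;
}

console.log(alterIndexStatement("dbo", "Orders", "IX_Orders_Date", 12.5));
// ALTER INDEX [IX_Orders_Date] ON [dbo].[Orders] REORGANIZE;
console.log(alterIndexStatement("dbo", "Orders", "PK_Orders", 45));
// ALTER INDEX [PK_Orders] ON [dbo].[Orders] REBUILD;
```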

from reshmeeauckloo http://bit.ly/2mHUNQb

|

SQL script batch execution using sqlcmd in PowerShell

There is often a mismatch between the needs of the development team (multiple discrete T-SQL files for separate concerns) and those of the release team (a one-step automated deployment). The script bridges this gap by using sqlcmd.exe to run a batch of SQL scripts.

A text file (the manifest) lists all the SQL files in the order they need to run, to avoid errors when there are dependencies between the scripts. Instead of using a text file, a number could be prefixed to each script name to indicate the order in which it runs.
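If the numeric-prefix alternative is used, the prefixes must be compared as numbers: with a plain string sort, "10_Seed.sql" comes before "2_Views.sql". A minimal JavaScript sketch, assuming a hypothetical naming convention where every file name starts with digits:

```javascript
// Sketch of ordering scripts by a numeric file-name prefix.
// Assumed (hypothetical) convention: each name starts with digits, e.g. "2_Views.sql".
function orderByNumericPrefix(fileNames) {
  // Parse every prefix up front so that a badly named file is always reported.
  const withPrefix = fileNames.map((name) => {
    const match = /^(\d+)/.exec(name);
    if (!match) throw new Error(`No numeric prefix: ${name}`);
    return { name, prefix: Number(match[1]) };
  });
  // Compare prefixes numerically; ties fall back to the full name.
  withPrefix.sort((a, b) => a.prefix - b.prefix || a.name.localeCompare(b.name));
  return withPrefix.map((entry) => entry.name);
}

// Usage with hypothetical script names
const ordered = orderByNumericPrefix(["10_Seed.sql", "2_Views.sql", "1_Tables.sql"]);
console.log(ordered); // 1_Tables.sql, 2_Views.sql, 10_Seed.sql
```

Zero-padding the prefixes (001_, 002_, ...) avoids the problem for tools that only sort as strings, at the cost of deciding the padding width in advance.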

The script expects two parameters –

- Path of folder containing the set of T-SQL files (and the manifest file, see below)

- Connection string (in the example below, a server name such as DEV-DB-01)

The script can be downloaded from technet gallery.

## Provide the path name of the SQL scripts folder and connection string
##.\SQLBatchProcessing.ps1 -SQLScriptsFolderPath "C:\Sql Batch Processing\SQLScripts" -ConnectionString "DEV-DB-01"

Param(
    [Parameter(Mandatory=$true)][String]$ConnectionString,
    [Parameter(Mandatory=$true)][String]$SQLScriptsFolderPath
)

Set-ExecutionPolicy -ExecutionPolicy:Bypass -Force -Confirm:$false -Scope CurrentUser
Clear-Host

#check whether the SQL scripts folder exists
$SQLScriptsPath = Resolve-Path $SQLScriptsFolderPath -ErrorAction Stop

#a manifest file will exist in the SQL scripts folder detailing the order the scripts need to run
$SQLScriptsManifestPath = $SQLScriptsFolderPath + "\Manifest.txt"

#find out whether the manifest file exists in the SQL scripts folder
$SQLScriptsManifestPath = Resolve-Path $SQLScriptsManifestPath -ErrorAction Stop

#if the manifest file is found, iterate through each non-blank line and validate that the corresponding SQL script exists before running any of them
Get-Content $SQLScriptsManifestPath | Where-Object { $_.Trim() -ne "" } | ForEach-Object {
    $SQLScriptsPath = $SQLScriptsFolderPath + "\" + $_.ToString()
    Resolve-Path $SQLScriptsPath -ErrorAction Stop
}

$SQLScriptsLogPath = $SQLScriptsFolderPath + "\" + "SQLLog.txt"
Add-Content -Path $SQLScriptsLogPath -Value "***************************************************************************************************"
Add-Content -Path $SQLScriptsLogPath -Value "Started processing at [$([DateTime]::Now)]."
Add-Content -Path $SQLScriptsLogPath -Value "***************************************************************************************************"
Add-Content -Path $SQLScriptsLogPath -Value ""

Get-Content $SQLScriptsManifestPath | Where-Object { $_.Trim() -ne "" } | ForEach-Object {
    $SQLScriptsPath = $SQLScriptsFolderPath + "\" + $_.ToString()
    $text = "Running script " + $_.ToString()
    Add-Content -Path $SQLScriptsLogPath -Value $text
    #use the server passed in as a parameter rather than a hard-coded name;
    #Add-Content keeps the log file in the same encoding as the lines written above
    sqlcmd -S $ConnectionString -i $SQLScriptsPath | Add-Content -Path $SQLScriptsLogPath
}

Add-Content -Path $SQLScriptsLogPath -Value "***************************************************************************************************"
Add-Content -Path $SQLScriptsLogPath -Value "End processing at [$([DateTime]::Now)]."
Add-Content -Path $SQLScriptsLogPath -Value "***************************************************************************************************"
Add-Content -Path $SQLScriptsLogPath -Value ""
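The key design choice in the script is to validate the whole manifest before running any SQL, so a typo in one file name cannot leave the database half-deployed. The same fail-fast check is sketched below in JavaScript for illustration. The folder contents are passed in as a Set of file names (hypothetical values) so the logic can be shown without touching the file system, and blank manifest lines are skipped.

```javascript
// Sketch of the fail-fast manifest check: every listed script must exist before any is run.
// Note: this Set lookup is case-sensitive, whereas Windows file names are not.
function scriptsFromManifest(manifestText, existingFiles) {
  const entries = manifestText
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line.length > 0); // ignore blank lines
  const missing = entries.filter((name) => !existingFiles.has(name));
  if (missing.length > 0) {
    // Stop before running anything, like Resolve-Path -ErrorAction Stop in the script
    throw new Error(`Missing scripts: ${missing.join(", ")}`);
  }
  return entries; // manifest order is the execution order
}

// Usage with hypothetical file names
const folder = new Set(["CreateTables.sql", "CreateViews.sql", "SeedData.sql"]);
const runOrder = scriptsFromManifest("CreateTables.sql\r\nCreateViews.sql\r\n\r\nSeedData.sql\r\n", folder);
console.log(runOrder);
```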

from reshmeeauckloo http://bit.ly/2mntBWD

|

Create DevTest Labs in Azure

Azure DevTest Labs is available in UK South and UK West as of December 2016, in addition to the 21 other regions it already supported.

The steps to create the DevTest lab are

- Log in to the Azure portal as an administrator

- Click the green + New menu

- Type DevTest Labs into the search box

- Select DevTestLabs from the results page

- Click on Create from the Description page.

The advantages of using DevTest Labs, as listed on the Description page, are:

DevTest Labs helps developers and testers to quickly create virtual machines in Azure to deploy and test their applications. You can easily provision Windows and Linux machines using reusable templates while minimizing waste and controlling cost.

- Quickly provision development and test virtual machines

- Minimize waste with quotas and policies

- Set automated shutdowns to minimize costs

- Create a VM in a few clicks with reusable templates

- Get going quickly using VMs from pre-created pools

- Build Windows and Linux virtual machines

- Enter the lab name, select the subscription, select the location North Europe, tick the Pin to Dashboard check box and optionally update the Auto-shutdown schedule.

- Click on Create.

- The dashboard is displayed with a new tile showing that the DevTest Lab is being deployed.

- The DevTest Lab page is displayed once deployment of the DevTest Lab is completed.

Instead of using the Portal, PowerShell can be used to create an Azure DevTest Lab. The GitHub repository http://bit.ly/2jhNQ4t has an example of how it can be achieved.

The repository has a readme file, a deployment template with a corresponding parameters file and a PowerShell script to execute the deployment.

The Readme file provides a description of the resources created.

About the resources created in the Demo Lab:

The ARM template creates a demo lab with the following things:

* It sets up all the policies and a private artifact repo.

* It creates 3 custom VM images/templates.

* It creates 4 VMs, and 3 of them are created with the new custom VM images/templates.

To run the PowerShell script, the subscription ID is required. It is displayed in the output of the cmdlet Login-AzureRmAccount after signing in.

The PowerShell script is run as below:

.\ProvisionDemoLab.ps1 -SubscriptionId 41111111-1111-1111-1111-111111111111 -ResourceGroupLocation northeurope -ResourceGroupName RTestLab

The script produces the following results.

In the portal, the result shows the 4 VMs.

The repositories have been created as well.

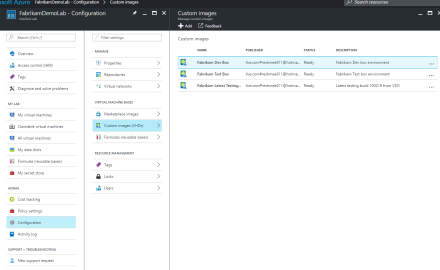

Custom images of the running machines have been created as well.

There are artifacts ready to be used though none are applied yet to the virtual machines.

Instead of executing the configuration in the GitHub repository, you can create your own template and parameters files by creating a new resource in the Portal and exporting its template.

from reshmeeauckloo http://bit.ly/2j4Ys94

|

Unable to update User Profile Property due to Policy Settings set to Disabled

The web service userprofileservice <site url>/_vti_bin/userprofileservice.asmx has to be used to update user profile properties for other users in SharePoint 2013 and SharePoint Online.

Even though the web service call returned HTTP status code 200 (success), the user profile property was blank when the user's properties were queried using the REST API method sp.userprofiles.peoplemanager/getpropertiesfor.

<site url>/_api/sp.userprofiles.peoplemanager/getpropertiesfor(@v)?@v='i%3A0%23.f%7Cmembership%7CfirstName.LastName%40arteliauk.onmicrosoft.com'
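The account in the query is the claims-encoded login name (i:0#.f|membership|...) with its special characters URL-encoded. A small JavaScript sketch that builds the same URL shape; the site URL and login name in the usage are placeholders.

```javascript
// Builds the getpropertiesfor REST URL for a SharePoint Online account.
function getPropertiesForUrl(siteUrl, claimsLoginName) {
  // encodeURIComponent turns ":" into %3A, "#" into %23, "|" into %7C and "@" into %40
  const encoded = encodeURIComponent(claimsLoginName);
  const base = siteUrl.replace(/\/+$/, ""); // avoid "//_api"
  return `${base}/_api/sp.userprofiles.peoplemanager/getpropertiesfor(@v)?@v='${encoded}'`;
}

// Usage with placeholder values
const url = getPropertiesForUrl(
  "https://contoso.sharepoint.com/",
  "i:0#.f|membership|firstName.LastName@contoso.onmicrosoft.com"
);
console.log(url);
```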

The user profile property I updated was PictureUrl; however, it was showing as null:

<d:PictureUrl m:null="true" />

After spending a couple of hours trying to figure out why the user profile property was not showing, I decided to review the Picture user profile property and how it differed from other user profile properties, e.g. Job Title, which was showing the updated value.

For some reason, the policy setting on the Picture property was set to Disabled.

In Manage User Profile Policies in the SharePoint admin center, Disabled means:

The property or feature is visible only to the User Profile Service administrator. It does not appear in personalized sites or Web Parts, and it cannot be shared.

It also meant that the property was not returned when queried through the REST API.

After updating the policy setting to Optional, the user's PictureUrl property is returned by the REST API query and shows up in SharePoint sites as well.

<d:PictureUrl>siteurl/StaffDetail/js.jpg?t=63579640559</d:PictureUrl>

from reshmeeauckloo http://bit.ly/2hPZfdw

|

Unable to change Content Type of document in Library

I was trying to remove a content type from a document library associated with multiple content types. As a first step, I identified all documents tagged with the content type to be removed and tried to switch them to another content type. However, some documents still showed the old content type despite being saved with the new one by updating the [Content Type] field.

I tried removing the document and adding it back to the document library; unfortunately, it still referenced the old content type.

The solution that worked for me was to open the document in the desktop Office application and open “Advanced Properties”.

Find and select the property ContentTypeId and click on Delete.

After deleting the ContentTypeId property I was able to update the content type property of the document and eventually remove the old content type from the document library.

from reshmeeauckloo http://bit.ly/2hZNhvL

|

Create Dev/Test SharePoint 2013 environment in Azure

Azure has a trial image to build either SharePoint 2013 HA farm or SharePoint 2013 Non-HA farm.

When trying to create SharePoint 2013 Non-HA farm, I was stuck at step “Choose storage account type” with the message “Loading pricing…”.

Following SharePoint Server 2016 dev/test environment in Azure, I managed to create a SharePoint 2013 environment in Azure by running PowerShell commands.

There are three major phases to setting up this dev/test environment:

- Set up the virtual network and domain controller (ad2013VM). I followed all the steps described in Phase 1: Deploy the virtual network and a domain controller.

- Configure the SQL Server computer (sql2012VM). I followed all the steps from Phase 2: Add and configure a SQL Server 2014 virtual machine, with a few changes to the PowerShell script to create a SQL Server 2012 SP2 machine on Windows Server 2012 R2.

- Configure the SharePoint server (sp2013VM). I followed all the steps from Phase 3: Add and configure a SharePoint Server 2016 virtual machine, with a few changes to the script to create a SharePoint 2013 virtual machine.

Configure the SQL Server computer (sql2012VM).

I needed the name of the SQL Server 2012 SP2 Azure image offer. All SQL Server image offers can be listed using the cmdlet Get-AzureRmVMImageOffer.

Get-AzureRmVMImageOffer -Location "westeurope" -PublisherName "MicrosoftSQLServer"

The following SQL Image Offers are available

Offer
-----
SQL2008R2SP3-WS2008R2SP1
SQL2008R2SP3-WS2012
SQL2012SP2-WS2012
SQL2012SP2-WS2012R2
SQL2012SP3-WS2012R2
SQL2012SP3-WS2012R2-BYOL
SQL2014-WS2012R2
SQL2014SP1-WS2012R2
SQL2014SP1-WS2012R2-BYOL
SQL2014SP2-WS2012R2
SQL2014SP2-WS2012R2-BYOL
SQL2016-WS2012R2
SQL2016-WS2012R2-BYOL
SQL2016-WS2016
SQL2016-WS2016-BYOL
SQL2016CTP3-WS2012R2
SQL2016CTP3.1-WS2012R2
SQL2016CTP3.2-WS2012R2
SQL2016RC3-WS2012R2v2
SQL2016SP1-WS2016
SQL2016SP1-WS2016-BYOL
SQLvNextRHEL

I was interested in the SQL Server 2012 SP2 Standard edition. Fortunately, the Azure image offer names are intuitive, e.g. SQL2012SP2-WS2012R2 means a Windows Server 2012 R2 virtual machine with SQL Server 2012 SP2 installed.
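The naming convention can be made explicit with a small parser, for example to filter the offer list programmatically. This is a hypothetical JavaScript helper based only on the names listed above ("<SQL version>-<Windows version>", optionally followed by "-BYOL"); it is not an Azure API, and names outside the pattern such as SQLvNextRHEL are rejected.

```javascript
// Hypothetical helper based only on the offer names listed above.
function parseSqlImageOffer(offer) {
  const [sql, windows, ...rest] = offer.split("-");
  if (!windows) throw new Error(`Name does not follow the convention: ${offer}`);
  return { sql, windows, byol: rest.includes("BYOL") };
}

// Usage: pick SQL Server 2012 SP2 on Windows Server 2012 R2, excluding bring-your-own-licence offers
const offers = ["SQL2012SP2-WS2012", "SQL2012SP2-WS2012R2", "SQL2012SP3-WS2012R2-BYOL"];
const wanted = offers.filter((name) => {
  const { sql, windows, byol } = parseSqlImageOffer(name);
  return sql === "SQL2012SP2" && windows === "WS2012R2" && !byol;
});
console.log(wanted); // only SQL2012SP2-WS2012R2
```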

I also needed the SKU value for SQL2012SP2-WS2012R2, which can be retrieved using the cmdlet Get-AzureRmVMImageSku.

Get-AzureRmVMImageSku -Location "westeurope" -PublisherName "MicrosoftSQLServer" -Offer SQL2012SP2-WS2012R2 | Format-Table Skus

The following SKUs for SQL2012SP2-WS2012R2 are available

Skus
----
Enterprise
Enterprise-Optimized-for-DW
Enterprise-Optimized-for-OLTP
Standard
Web

The changes from the original script are on the following lines

- line 21: "sql2012VM" stored in variable $vmName

- line 23: $vnet=Get-AzureRMVirtualNetwork -Name "SP2013Vnet" -ResourceGroupName $rgName

- line 40: $vm=Set-AzureRMVMSourceImage -VM $vm -PublisherName MicrosoftSQLServer -Offer SQL2012SP2-WS2012R2 -Skus Standard -Version "latest"

Configure the SharePoint server (sp2013VM).

Similarly to creating the SQL virtual machine, I needed the Azure Image Offer Name for SharePoint 2013.

The available Azure image offers for the publisher MicrosoftSharePoint can be retrieved using the cmdlet below.

Get-AzureRmVMImageOffer -Location "westeurope" -PublisherName "MicrosoftSharePoint"

Only one result “MicrosoftSharePointServer” is returned.

To get the available SKUs for “MicrosoftSharePointServer”, the cmdlet below can be run.

Get-AzureRmVMImageSku -Location "westeurope" -PublisherName "MicrosoftSharePoint" -Offer "MicrosoftSharePointServer" | Format-Table Skus

Two results are returned : “2013” and “2016”. I am interested in the “2013” value which refers to the Microsoft SharePoint Server 2013 version.

The changes from the original script are on the following lines

- line 18: $vmName="sp2013VM"

- line 26: $vnet=Get-AzureRMVirtualNetwork -Name "SP2013Vnet" -ResourceGroupName $rgName

- line 34: $skuName="2013"

The end result of the PowerShell scripts is a resource group with the virtual machines (adVm, sp2013Vm and sql2012VM), network interfaces, availability sets, storage account and public IP addresses to enable SharePoint 2013 to run in Azure VMs.

from reshmeeauckloo http://bit.ly/2ggfX4H

|

Import Configuration Data to CRM using Microsoft.Xrm.Data.PowerShell

Dynamics CRM data can be exported using the data migration tool by first generating the data schema file and then using the latter to export selected entities and fields. The same tool can be used to import data into other environments; however, it is still a manual process.

I have written a script using Microsoft.Xrm.Data.PowerShell module in PowerShell to automate the data import. The zip generated by the export needs to be unzipped so that the path of the files can be passed to the method called to import the data.

The script can be downloaded from TechNet gallery. Please note that the script has been tested with limited data so might require changes depending on the data being imported.

Call the method Import-ConfigData as in the snippet below.

Add-Type -Path ".\Assemblies\Microsoft.Xrm.Tooling.CrmConnectControl.dll"
Add-Type -Path ".\Assemblies\Microsoft.Xrm.Tooling.Connector.dll"
Import-Module ".\Microsoft.Xrm.Data.PowerShell\Microsoft.Xrm.Data.PowerShell.psm1"

# $serverName, $serverPort and $organizationName must already be set at this point
$crmOrg = New-Object `
    -TypeName Microsoft.Xrm.Tooling.Connector.CrmServiceClient `
    -ArgumentList ([System.Net.CredentialCache]::DefaultNetworkCredentials), ([Microsoft.Xrm.Tooling.Connector.AuthenticationType]::AD), $serverName, $serverPort, $organizationName, $False, $False, ([Microsoft.Xrm.Sdk.Discovery.OrganizationDetail]$null)

$dataFilePath = "\PkgFolder\Configuration Data\data.xml"
$dataSchemaFilePath = "\PkgFolder\Configuration Data\data_schema.xml"

Write-Output "Begin import of configuration data..."
Import-ConfigData -dataFilePath $dataFilePath -dataSchemaFilePath $dataSchemaFilePath -crmOrg $crmOrg
Write-Output "End import of configuration data..."

First, initialise the CRM connection object of type Microsoft.Xrm.Tooling.Connector.CrmServiceClient, stored in the variable $crmOrg above.

Pass the following parameters to the method.

dataFilePath: path of the file data.xml

crmOrg: CRM connection object

dataSchemaFilePath: path of the file data_schema.xml

from reshmeeauckloo http://bit.ly/2fvBhT2

|
